For almost a decade, the core Endeca MDEX engine that underpins Oracle Endeca Information Discovery (OEID) has supported one-time indexing (often referred to as a Baseline Update) as well as incremental updates (often referred to as partials). Through all of the incarnations of this functionality, from “partial update pipelines” to “continuous query”, there was one common limitation: update operations could only ever act on a “per-record” basis.

If you’re coming from a SQL/RDBMS background, this was a huge limitation and forced a conceptual change in the way that you think about data. Obviously, Endeca is not (and never was) a relational system, but losing the freedom that SQL provides to update data whenever and wherever you please was often a pretty big constraint, especially at scale. Building an index nightly for 100,000 E-Commerce products is no big deal. Running a daily process to feed 1 million updated records into a 30 million record Endeca Server instance just so that a set of warranty claims could be “aged” from current month to prior month is something completely different.

Thankfully, with the release of the latest set of components for the ETL layer of OEID (called OEID Integrator), huge changes have been made to the interactions available for modifying an Endeca Server instance (now called a “Data Domain”). If you’ve longed for a “SQL-style experience” where records can be updated or deleted from a data store by almost any criteria imaginable, OEID Integrator v3.0 delivers.

Delete Where…

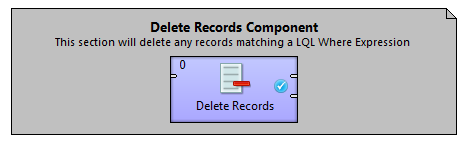

I’ve been on a number of projects over the past 5 years where the ability to define a set of records to be deleted from Endeca Server based on an attribute, or set of attributes, would have been invaluable. There has been invalid financial data in a Spend Analytics application skewing metrics, classified data that users had made visible in an Enterprise Search application causing a security risk, and lots more. With the new Delete component, eliminating this data from the index is a snap.

Let’s say I have an application to allow users to do Data Discovery on financial data from the last year. Every morning, I run an index update to add in the latest data from the previous day. However, I also want to “sunset” older data, say, anything older than 365 days. In previous versions of OEID, I would need to go to my index (or more likely, the system of record), pull the unique identifiers for the data, massage it so that I can generate the Endeca Record Specifier (the equivalent of a “primary key”) and load it into Endeca as a delete operation.

In version 3.0, I can just crack open the Delete component and specify an EQL expression to identify those older records.

And we’re done. I can see this being a huge help to customers that tend to “evolve” their index, appending and modifying data on the fly over time. It also opens up the possibility for applications that follow a more traditional “kill and fill” model to shift their architecture in this direction.
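To make the contrast concrete, here is a minimal Python sketch of the two deletion styles over an in-memory list of records. It is only an analogy: it is not EQL and not the Integrator API, and the field names (`record_id`, `transaction_date`) and both helper functions are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical in-memory stand-in for an index; field names are illustrative only.
records = [
    {"record_id": "A1", "transaction_date": date(2012, 1, 15)},
    {"record_id": "A2", "transaction_date": date(2012, 3, 29)},
    {"record_id": "A3", "transaction_date": date(2013, 3, 28)},
]


def delete_by_keys(index, keys):
    """Per-record style: the caller must already know every key to delete."""
    keys = set(keys)
    return [r for r in index if r["record_id"] not in keys]


def delete_where(index, predicate):
    """'Delete where' style: remove every record that matches a condition."""
    return [r for r in index if not predicate(r)]


today = date(2013, 3, 29)
cutoff = today - timedelta(days=365)

# Old approach: first look up the keys of the stale records, then delete by key.
stale_keys = [r["record_id"] for r in records if r["transaction_date"] < cutoff]
after_old = delete_by_keys(records, stale_keys)

# New approach: state the condition once and let the store find the records.
after_new = delete_where(records, lambda r: r["transaction_date"] < cutoff)

print([r["record_id"] for r in after_new])
```

Both approaches end up with the same records. The difference is that the second one needs no separate pass to collect record keys from the index or the system of record.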

Update Where…

By the same token, the new update component opens up a whole new set of options when designing your data architecture. For applications that require “flagging” or aging style operations (navigate by current month, for example), the new Update functionality is a great fit. It supports Adding, Replacing and Deleting Assignments based on an incoming data feed and uses the same EQL mechanism for record identification.

Let’s say it’s April 1st (it’s really only 3 days away) and I want to take all of my March 2013 records and flip their “Current Month” attribute to ‘No’. I create a one-line data file holding the data I want to send into the index, in this case just the new “Current Month” value (this is a VERY simple example; you could have a number of attributes getting updated here).

I feed that into a Modify Records component and specify my EQL Record Set Specifier expression.

And, again, that’s it! I’ve now successfully flipped my March records to No. One quick note here is that the Modify component will also add attributes to your index if they don’t exist. In the above example, I actually ran my update against an index that didn’t include a CurrentMonth attribute for testing purposes and it worked great.
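The add/replace/delete behaviour described above can be sketched in plain Python. This is an illustration under stated assumptions, not the Modify component itself: records are modelled as dictionaries of multi-value attributes, `modify_where` is a hypothetical helper, and the `Month` attribute is invented for the example.

```python
def modify_where(index, predicate, assignments, mode="replace"):
    """Apply assignments to every record matching predicate.

    mode is one of "add", "replace" or "delete". Attributes are lists of
    values, and a missing attribute is created on demand.
    """
    if mode not in ("add", "replace", "delete"):
        raise ValueError(f"unknown mode: {mode}")
    for record in index:
        if not predicate(record):
            continue
        for attr, value in assignments.items():
            values = record.get(attr, [])
            if mode == "add":
                if value not in values:
                    values = values + [value]
            elif mode == "replace":
                values = [value]
            else:  # delete a single assignment
                values = [v for v in values if v != value]
            if values:
                record[attr] = values
            else:
                record.pop(attr, None)
    return index


# Hypothetical records; C2 has no CurrentMonth attribute at all.
records = [
    {"record_id": "C1", "Month": ["2013-03"], "CurrentMonth": ["Yes"]},
    {"record_id": "C2", "Month": ["2013-03"]},
    {"record_id": "C3", "Month": ["2013-04"], "CurrentMonth": ["Yes"]},
]

# The one-line "data file": the assignment to push onto every matching record.
modify_where(
    records,
    lambda r: "2013-03" in r.get("Month", []),
    {"CurrentMonth": "No"},
    mode="replace",
)

for r in records:
    print(r["record_id"], r.get("CurrentMonth"))
```

Note that C2 picks up a CurrentMonth value even though it had none before, which loosely mirrors the way the update worked against an index that did not yet have the attribute.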

Hi,

We are using partial updates and Endeca 6.3 for our website and we can only run a few partials a day because it takes a while and each time the entire cache gets invalidated. How does this work here? I assume you actually do an update on the index served by a Dgraph. Is this applicable for more realtime updates without the Forge?

You are correct in that these are updates without a Forge process, which goes away as of OEID 2.x. In OEID 3.0 (the version of the MDEX is up to 7.5.1), there is no Forge process and the ways that you can push data in differ pretty substantially (direct socket connection for bulk add/replace, web services for more fine-grained updates such as the new update/delete functionality discussed here). In the pre-Oracle days, I know there was a plan to platform both Commerce and OEID on MDEX 7 Series at some point, but I’m not sure if that’s still the plan.

If you’re on 6.3, there are definitely some things that can be done to tweak partial update impacts from an operational standpoint. There’s merge scheduling, cache flushing and ingesting, but it’s possible not all of those would apply, depending on your topology.

What percentage of the index would you say you’re updating with each partial? We’ve seen partial updates on lower versions of the MDEX (6.1.4) where a few hundred thousand updates can be handled (index size is 40 million records) relatively easily. Keep in mind, the Forge process for these was pretty lightweight so the bulk of the processing time occurs in the partial update being applied to the dgraph. If there was a long running forge AND a lot of updates for the dgraph to process, I could see this being pretty limiting.

Right now we handle about 40.000 updates each time (for an index of 14 mln products but with hundreds of mln of attributes) which we plan to increase to 60.000. At this point we have to take each Dgraph (4*) offline, update it and heat it up, and we do it one by one, this takes about 50 minutes from start to finish. The “continuous query” proposition does not work because the load is too high on our website. But we are looking for a more ‘real-time’ update mechanism.

But OEID is a different platform used for a different purpose so we’ll have to wait for a version where this is integrated.

Thanks for the response

I think the “grand vision” is still to have both products share a common code base. Or at the very least, to have some of the high value OEID enhancements ported over to the Commerce offering.

If you ever want to talk partial update performance, feel free to reach out. Your implementation definitely seems to be of the “optimize for latency/sacrifice 25% of throughput” variety, which we see with high volume E-Commerce sites. There are potentially some tricks that can be utilized to speed up the warm up and distribution pieces of your deployment script. You’ll still be limited by the fact that you have to use partial updates but I wonder if that 50 minutes could be improved…
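As a rough back-of-the-envelope view of the numbers quoted in this thread, the sketch below works out the share of the index touched per partial and the time per Dgraph. It assumes the four Dgraphs take equal time and are cycled strictly one at a time; those are assumptions for illustration, not measurements.

```python
# Figures quoted in the comments above.
index_size = 14_000_000      # products in the index
updates_now = 40_000         # records per partial today
updates_planned = 60_000     # planned records per partial
dgraph_count = 4
total_minutes = 50           # full rolling update, start to finish

# Share of the index changed by each partial update.
share_now = updates_now / index_size          # roughly 0.29%
share_planned = updates_planned / index_size  # roughly 0.43%

# Assumption: each Dgraph takes the same time and only one is offline at once.
minutes_per_dgraph = total_minutes / dgraph_count  # 12.5
offline_share = 1 / dgraph_count                   # 0.25 of serving capacity

print(f"{share_now:.2%} now, {share_planned:.2%} planned")
print(f"{minutes_per_dgraph} minutes per Dgraph, {offline_share:.0%} of capacity offline")
```

With one of four Dgraphs out of rotation at any moment, a quarter of serving capacity is unavailable for the duration of the rollout.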