We have been anticipating the intersection of big data with data discovery for quite some time. What exactly that will look like in the coming years is still up for debate, but we think Oracle’s new Big Data Discovery application provides a window into what true discovery on Hadoop might entail.

We’re excited about BDD because it wraps data analysis, transformation, and discovery tools together into a single user interface, all while leveraging the distributed computing horsepower of Hadoop.

BDD’s roots clearly lie in Oracle Endeca Information Discovery (OEID), and some of the best aspects of that application (ad-hoc analysis, fast response times, and instructive visualizations) have made it into this new product. But while BDD has inherited a few of OEID’s underpinnings, it is in many ways a complete overhaul. OEID users would be hard-pressed to find more than a handful of similarities between Endeca and this new offering, hence the completely new name.

The biggest difference, of course, is that BDD is designed to run on the hottest data platform in use today: Hadoop. It is also cutting-edge in that it uses the blazingly fast Apache Spark engine to perform all of its data processing. The result is a very flexible tool that lets users easily upload new data into their Hadoop cluster or, conversely, pull existing data from their cluster into BDD for exploration and discovery. It also includes a robust set of functions that let users test and apply transformations to their data on the fly in order to get it into the best possible working state.

In this post, we’ll explore a scenario where we take a basic spreadsheet and upload it to BDD for discovery. In another post, we’ll take a look at how BDD takes advantage of Hadoop’s distributed architecture and parallel processing power. Later on, we’ll see how BDD works with an existing data set in Hive.

We installed our instance of BDD on CDH 5.3, Cloudera’s latest distribution of Hadoop. From our perspective, this is a stable platform for BDD, and Cloudera customers should also have a fairly easy time setting up BDD on their existing clusters.

Explore

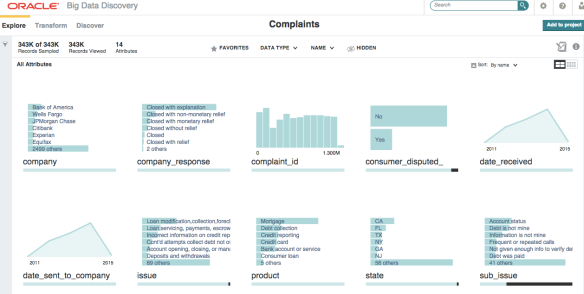

Getting started with BDD is relatively simple. After we upload a new spreadsheet, BDD automatically writes the data to HDFS, then indexes and profiles it based on some clever intuition.

What you see above is just a little bit of the magic that BDD has to offer. This data comes from the Consumer Financial Protection Bureau and details four years’ worth of consumer complaints to financial services firms. We uploaded the CSV file to BDD in exactly the condition we received it from the bureau’s website. After we specified a few simple attributes, like the quote character and whether the file contained headers, we pressed “Done” and the application got to work processing the file. BDD then automatically built the charts and graphs displayed above to give us a broad overview of what the spreadsheet contained.

As you can see, BDD does a good job presenting the data to us in broad strokes. Some of the findings we get right from the start are the names of the companies that have the most complaints and the kinds of products consumers are complaining about.
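BDD does all of this itself, but it can help to see what the two upload options and a "most complaints by company" chart amount to. The Python sketch that follows is only an illustration under stated assumptions: the function names, the `Company` and `Product` column names, and the toy rows are hypothetical, and this is not how BDD is implemented.

```python
import csv
import io
from collections import Counter

def load_csv(text, quotechar='"', has_header=True):
    """Parse CSV text into a list of dicts using the two options BDD asked us for.

    Without a header row, columns get placeholder names col_0, col_1, ...
    """
    rows = list(csv.reader(io.StringIO(text), quotechar=quotechar))
    if not rows:
        return []
    if has_header:
        header, body = rows[0], rows[1:]
    else:
        header, body = [f"col_{i}" for i in range(len(rows[0]))], rows
    return [dict(zip(header, row)) for row in body]

def top_values(records, field, n=3):
    """Count the non-empty values of `field` and return the n most frequent."""
    counts = Counter(r[field] for r in records if r.get(field))
    return counts.most_common(n)

# Toy rows for illustration, not taken from the CFPB file.
sample = (
    "Company,Product\n"
    "Bank A,Mortgage\n"
    '"Lender B, Inc.",Credit card\n'
    "Bank A,Credit card\n"
)
records = load_csv(sample)
print(top_values(records, "Company"))  # [('Bank A', 2), ('Lender B, Inc.', 1)]
```

The quote character matters because values such as `"Lender B, Inc."` contain the delimiter; without the right quote setting, that row would split into too many columns.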

We can also explore any of these fields in more detail.

Now we get an even more detailed view of this date field: we can see how many unique values it has, whether any records are missing data, and the range of dates it covers. This feature is incredibly helpful for data profiling, but we can go even deeper with refinements.
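The statistics in that field profile are simple to state precisely. As a rough Python sketch (not BDD's code), profiling a date attribute means counting distinct dates, counting empty values, and taking the minimum and maximum. Here we also count values that are present but unparseable separately. The field name `Date received`, the `%m/%d/%Y` format, and the toy rows are assumptions for illustration.

```python
from datetime import datetime

def profile_date_field(records, field, fmt="%m/%d/%Y"):
    """Summarize one date attribute: distinct values, missing and unparseable counts, range."""
    parsed, missing, invalid = [], 0, 0
    for record in records:
        raw = (record.get(field) or "").strip()
        if not raw:
            missing += 1  # empty or absent value
            continue
        try:
            parsed.append(datetime.strptime(raw, fmt).date())
        except ValueError:
            invalid += 1  # present, but not in the expected format
    return {
        "distinct": len(set(parsed)),
        "missing": missing,
        "invalid": invalid,
        "min": min(parsed) if parsed else None,
        "max": max(parsed) if parsed else None,
    }

# Toy rows for illustration only.
rows = [
    {"Date received": "03/01/2013"},
    {"Date received": "03/01/2013"},
    {"Date received": "12/31/2014"},
    {"Date received": ""},
    {"Date received": "2014-13-45"},
]
print(profile_date_field(rows, "Date received"))
```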

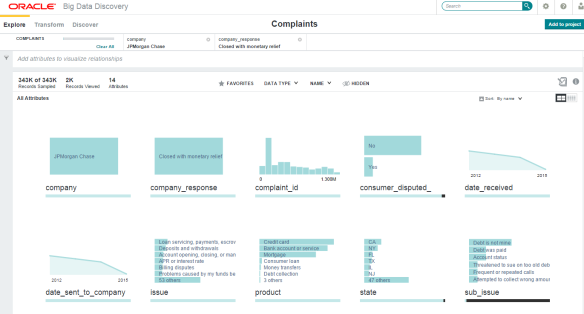

With just a few clicks on a couple of charts, we have refined our view of the data to a specific company, JPMorgan Chase, and a type of response, “Closed with monetary relief”. Remember, we have yet to clean or manipulate the data ourselves, but we’ve already been able to dissect it in a way that would be difficult with a spreadsheet alone. Users of OEID and other discovery applications will recognize many of these actions, such as drilling down into the records to get a unique view of the data, but users who are unfamiliar with these kinds of tools should also find the interface easy and intuitive.
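Conceptually, each click on a chart adds a refinement that narrows the record set. The Python sketch that follows uses a common faceted-navigation rule (a record matches if its value is among the selected values for every refined attribute). We have not confirmed that this is exactly how BDD combines refinements. The `Company response` column name and the toy rows are assumptions.

```python
def refine(records, selections):
    """Faceted refinement: keep a record only if, for every selected attribute,
    its value is one of the chosen values (OR within an attribute, AND across)."""
    return [
        r for r in records
        if all(r.get(attr) in allowed for attr, allowed in selections.items())
    ]

# Toy rows for illustration only.
rows = [
    {"Company": "JPMorgan Chase", "Company response": "Closed with monetary relief"},
    {"Company": "JPMorgan Chase", "Company response": "Closed with explanation"},
    {"Company": "Bank A", "Company response": "Closed with monetary relief"},
]
selected = refine(rows, {
    "Company": {"JPMorgan Chase"},
    "Company response": {"Closed with monetary relief"},
})
print(len(selected))  # 1
```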

Transform

Another way BDD differentiates itself from some other discovery applications is with the actions available under the “Transform” tab.

Within this section of the application, users have a wealth of common transformation options available with just a few clicks. Operations like converting data types, concatenating fields, and taking absolute values can now be done on the fly, with a preview of the results available in near real time.
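To pin down what those three point-and-click operations do to a record, here is a small Python illustration. It is not BDD's transform language. The attribute names `City`, `State`, `Amount`, `AbsAmount`, and `Location` are hypothetical, and treating unconvertible values as `None` is our own assumption about how to handle bad input.

```python
def to_float(value):
    """Type conversion: text -> float, or None when the value is blank or not numeric."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None

def abs_value(number):
    """Absolute value that passes missing numbers (None) through unchanged."""
    return None if number is None else abs(number)

def concat(record, fields, sep=" "):
    """Concatenation: join the non-empty values of several attributes into one string."""
    return sep.join(record[f] for f in fields if record.get(f))

record = {"City": "Columbus", "State": "OH", "Amount": "-42.50"}
out = dict(record)  # work on a copy so the source record is untouched
out["Amount"] = to_float(record["Amount"])
out["AbsAmount"] = abs_value(out["Amount"])
out["Location"] = concat(record, ["City", "State"], sep=", ")
print(out)
```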

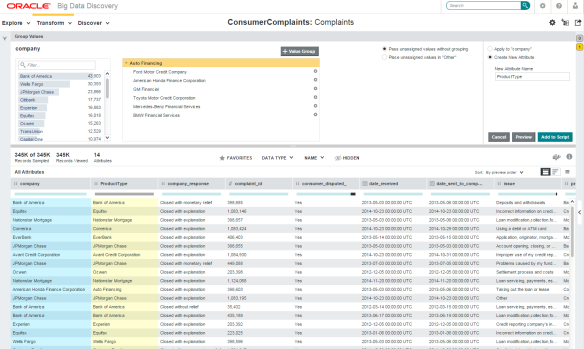

BDD also offers more complex transformation functions in its Transformation Editor, including date parsing, geocoding, HTML formatting, and sentiment analysis. All of these are built into the application; no plug-ins are required. Another nice feature is an easy way to group (or bin) attributes by value. For example, we can find all the car-related financing companies and group them into a single category to refine by later on.
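Grouping by value boils down to a lookup from raw values to a label. The Python sketch that follows illustrates the idea; it is not BDD's binning feature. The lender names and the `Auto Finance` label are hypothetical, and leaving unmatched values as they are (unless a default is given) is one reasonable policy, not necessarily BDD's.

```python
def bin_by_value(value, bins, default=None):
    """Return the group label whose member set contains `value`.

    Values that belong to no group keep their original value, unless a
    `default` label is supplied for them.
    """
    for label, members in bins.items():
        if value in members:
            return label
    return value if default is None else default

# Hypothetical lender names, for illustration only.
AUTO_LENDERS = {"Auto Finance": {"Acme Auto Finance", "Example Motor Credit"}}

companies = ["Acme Auto Finance", "Bank A", "Example Motor Credit"]
print([bin_by_value(c, AUTO_LENDERS) for c in companies])
# ['Auto Finance', 'Bank A', 'Auto Finance']
```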

BDD can also preview the results of a transform before committing the changes to all of the data. This lets users fine-tune their transforms with relative ease and minimal back-and-forth between data revisions.
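The preview-then-commit workflow can be sketched in a few lines of Python. This is only an illustration of the idea: the post does not say how BDD picks the rows it previews, so taking the first few records here is an assumption. The function names and the `State` example transform are hypothetical.

```python
from itertools import islice

def preview(records, transform, limit=5):
    """Run `transform` on copies of the first `limit` records only; the data set is untouched."""
    return [transform(dict(r)) for r in islice(records, limit)]

def commit(records, transform):
    """Run the same `transform` on copies of every record and return the new data set."""
    return [transform(dict(r)) for r in records]

def upper_state(record):
    """Example transform (hypothetical): normalize state codes to upper case."""
    record["State"] = record["State"].upper()
    return record

data = [{"State": s} for s in ["oh", "ny", "ca", "tx", "wa", "fl"]]
print(preview(data, upper_state, limit=2))  # [{'State': 'OH'}, {'State': 'NY'}]
print(data[0])                              # {'State': 'oh'} (original unchanged)
revised = commit(data, upper_state)
```

Because both steps work on copies, a user can iterate on the transform against the preview as often as needed before producing a new revision of the full data.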

Once we’re happy with our results, we can commit the transforms to the data, at which point BDD launches a Spark job behind the scenes to apply the changes. From this point, we can design a discovery interface that puts our enriched data set to work.

Discover

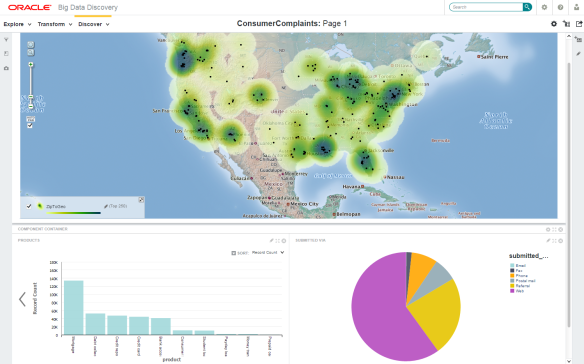

BDD includes a set of dynamic, advanced data visualizations that can turn any data set into something far more intuitive and usable.

The image above is just a sampling of the kind of visual tools BDD has to offer. These charts were built in a matter of minutes, and because much of the ETL process is baked into the application, it’s easy to go back and modify your data as needed while you design the graphical elements. This style of workflow is drastically different from workflows of the past, which required the back- and front-ends to be constructed in entirely separate stages, usually in totally different applications. This puts a lot of power into the hands of users across the business, whether they have technical chops or not.

And as we mentioned earlier, because BDD’s indexing framework is a close relative of Endeca’s, it inherits the same real-time processing and unstructured search capabilities. In other words, digging into your data is simple and highly responsive.

As more and more companies and institutions begin to re-platform their data onto Hadoop, there will be a growing need to effectively explore all of that distributed data. We believe that Oracle’s Big Data Discovery offers a wide range of tools to meet that need, and could be a great discovery solution for organizations that are struggling to make sense of the vast stores of information they have sitting on Hadoop.

If you would like to learn more, please contact us at info [at] ranzal.com.

Also be sure to stay tuned for Part 2!

Great article. I just started playing around with BDD. I have a couple of quick questions; can I post them here? I don’t think there is an official BDD community yet. Thanks

Sure! We’d be glad to take any questions you might have. There is also an Oracle Big Data Discovery group on LinkedIn if you’re interested in joining that.

Thanks Michael, this is an exciting new area in big data analytics! I will check out the LinkedIn community. I have two questions.

1) How hard or easy would it be for someone with little to no Endeca experience to learn BDD and implement projects?

2) (More of a technical question.) I used Cloudera’s CDH Hadoop distribution on an Oracle Linux VM and installed BDD on top of it. The installations of Hadoop and BDD were both successful. But when I try to upload an Excel/CSV file via Studio, once I click Browse and then Done, the page just loads and loads without taking me to the next page. I eventually have to close the browser because I’m tired of waiting. On the back end I don’t see any errors (though I’m not really sure where I need to look for them). However, Hive (as verified in the Hue UI) has a new table created from the Excel/CSV file I just uploaded. I’m just unable to see any data in Studio. I also tried uploading my data directly as a Hive table via the Hue UI and ran the DP CLI to bring that data into BDD Studio. The CLI runs successfully there too, but I don’t see any data on the BDD Studio page. Totally confused! I would really appreciate any help in this regard.

Cluster info: 4 nodes with lots of memory and cores. The data file I’m trying to upload is only 1,000 rows.

thanks,

Bob

Hi Bob,

To answer your first question, I think experience with Endeca would give the person a big head start, but it’s not necessary. Many of the transform and project-building tools in BDD are easy to use and don’t require the kind of technical back-end ETL work that Endeca requires. However, it would definitely be an advantage if the person were familiar with basic scripting and SQL.

As far as your error goes, I would check how much memory you have configured for your Spark workers in Cloudera Manager. You want at least 24 GB and ideally 48 GB or more. This setting should match the memory allocated to Spark in BDD’s settings. Spark is memory-intensive, and even with small tables we have found that we need a large amount of memory to run jobs. You should be able to monitor Spark’s running applications on port 18080, which might give you some clues as to whether this is the problem.
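To make the "these two settings should line up" advice concrete, here is a small, hypothetical Python check that compares the Spark memory BDD will request against the memory each worker offers. The function names and the simplified size parser are our own assumptions, not part of BDD or Cloudera Manager. The check only flags the case where BDD asks for more than the workers provide; it does not verify that the values match exactly.

```python
import re

# Multipliers for the size suffixes used in Spark-style settings such as "48g".
_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}

def parse_mem(text):
    """Convert a memory size like '48g', '7gb' or '512m' to bytes (simplified parser)."""
    match = re.fullmatch(r"\s*(\d+)\s*([kmgt])b?\s*", text.lower())
    if match is None:
        raise ValueError(f"unrecognized memory size: {text!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]

def fits(requested, available):
    """True if the Spark memory BDD requests is no larger than what each worker offers."""
    return parse_mem(requested) <= parse_mem(available)

print(fits("48g", "8g"))  # False: a 48g request against an 8 GB worker
print(fits("7gb", "8g"))  # True: a 7 GB request fits
```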

Hope that helps.

Best,

Michael

Thanks for those answers, Michael; they were indeed helpful. The default Spark worker memory in Cloudera Manager is 8 GB. I left it at that and changed the default value of 48g in the bdd.conf script to 7gb. Now I see (on the Spark UI page) that my applications finish running (earlier they never finished), but this time the applications are automatically killed, and on the BDD Studio page I get an error while uploading the Excel file saying “error occurred..contact administrator”.

I was able to look at the stderr log on the Spark nodes and at the Studio log:

SPARK STDERR.LOG

15/03/25 04:17:22 ERROR Executor: Exception in task 0.3 in stage 2.0 (TID 9)

java.io.InvalidClassException: org.apache.spark.rdd.PairRDDFunctions; local class incompatible: stream classdesc serialVersionUID = 8789839749593513237, local class serialVersionUID = -4145741279224749316

at java.io.ObjectStreamClass.initNonProxy(ObjectStreamClass.java:617)

at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1622)

at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1517)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1771)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1350)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:1990)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:19

.

BDD-STUDIO.LOG

2015-03-25 04:17:36,543 ERROR [AppWizardPortlet] Error adding project namespacing to data set key

com.endeca.portal.data.DataSourceException: Could not execute config service request. This usually happens when an invalid config service request is made, or when a read only Server receives a config service request. Error message: Client received SOAP Fault from server: Collection ‘default_edp_d6b34fbc-9320-471a-91d3-a903a4ae5065’ does not exist. Please see the server log to find more detail regarding exact cause of the failure.

at com.endeca.portal.mdex.ConfigServiceUtil.executeUpdateOperation(ConfigServiceUtil.java:32)

at com.endeca.portal.data.DataSource.addCollectionName(DataSource.java:915)

at com.endeca.portlet.appwizard.AppWizardPortlet.serveResourceCheckDPOozieJobStatus(AppWizardPortlet.java:585)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606).

Do you still think it is a memory issue? Do I really need 24 GB? I’m just trying to upload a 1 MB Excel file. Moreover, I’m setting ENABLE_HIVE_TABLE_DETECTOR to TRUE in the BDD script, and as I understand it, once BDD is installed, the Hive tables should automatically appear in Studio. Still, my Studio is empty (although I uploaded a sample table to Hive before installing BDD).

Appreciate your help. Thanks

Bob

It looks like this might require more in-depth troubleshooting. Please e-mail us at info [at] branchbird.com and we’ll see if we can help.

There is, however, one warning I see in the DGraph.out log. I’m not sure if this is the culprit:

WARN 03/25/15 07:37:48.753 UTC (1427269068753) DGRAPH {dgraph} Exchange 53 returned “500 Internal Server Error”: (Exception occurred at conversation.xq:61:3-61:54: endeca-err:MDEX0001 : exception encountered while executing external function ‘internal:parse-eql’, caused by error endeca-err:MDEX0001 : Invalid input : Collection ‘SCONFIG_ENTITY_COLLECTION’ does not exist)

Stacktrace:

conversation.xq:61:3-61:54

Hi Bobby,

I am currently struggling with exactly the same issue (with the same warnings and Spark errors) when uploading XLS/CSV files via BDD, as well as when importing them directly from Hive via the CLI. BDD creates the data in Hive tables when I upload the files but does not display them in the Data Sets Dashboard in BDD.

Have you been able to solve your problem? If so, I would be very interested in knowing how you fixed it.

Best regards,

Christopher

I’m interested to know whether this was ever resolved, as I have the exact same issue.


Is there any update on the error “Client received SOAP Fault from server: Collection ‘default_edp_d6b34fbc-9320-471a-91d3-a903a4ae5065’ does not exist”?

How can we solve this?

The detailed error is:

com.endeca.portal.data.DataSourceException: Could not execute config service request. This usually happens when an invalid config service request is made, or when a read only Server receives a config service request. Error message: Client received SOAP Fault from server: Collection ‘default_edp_eb763cff-1ff8-4513-98aa-c29176471871’ does not exist. Please see the server log to find more detail regarding exact cause of the failure.

at com.endeca.portal.mdex.ConfigServiceUtil.executeUpdateOperation(ConfigServiceUtil.java:32)

at com.endeca.portal.data.DataSource.addCollectionName(DataSource.java:915)

at com.endeca.portlet.appwizard.AppWizardPortlet.serveResourceCheckDPOozieJobStatus(AppWizardPortlet.java:585)

at sun.reflect.GeneratedMethodAccessor957.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at com.endeca.portlet.EndecaPortlet.serveResource(EndecaPortlet.java:796)

at com.sun.portal.portletcontainer.appengine.filter.FilterChainImpl.doFilter(FilterChainImpl.java:177)

at com.liferay.portal.kernel.portlet.PortletFilterUtil.doFilter(PortletFilterUtil.java:76)

at com.liferay.portal.kernel.servlet.PortletServlet.service(PortletServlet.java:100)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:844)